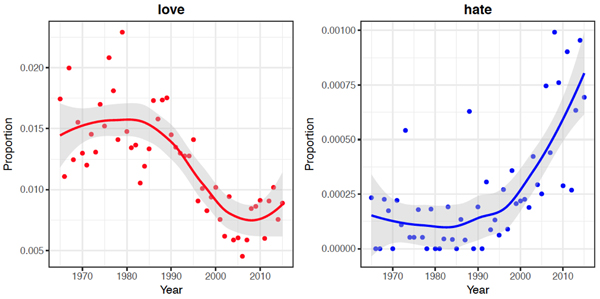

I am interested in the analysis of large, naturally occurring, datasets to investigate (especially modern) human cultural dynamics. “Naturally occurring” datasets are datasets that have not been built on purpose (like surveys, census data, etc.) but represent traces (often digital) of human activities. For example, I used data on dog registrations to analyse whether their popularity is due to individual considerations or to social influence (the latter, it seems), data from Google Books and the Gutenberg project to analyse the change in the expression of emotions through the last centuries, data on baby names and last.fm playlists to detect whether the turnover in popularity of cultural traits can give us some indication of the learning biases involved, or data on folktales, to check whether their complexity is correlated with the size of the population were they are diffused. I recently studied the cultural evolution of emotion words in a large (>150,000 songs) data set of English-language song lyrics and of topics and complexity in Harry Potter fan fiction. This line of work is often close to what is done in digital/computational humanities, or what in sociology is referred to as ‘cultural analytics’. What makes my research cohesive is the usage of methods and theories from cultural evolution to test hypotheses on these data. With a slogan: “big data need big theory”.

Alberto Acerbi

Cultural Evolution / Cognitive Anthropology / Individual-based modelling / Computational Social Science / Digital Media