Digital media and cultural evolution

Since 2015, I have been interested in applying a cultural evolution framework to the study of digital media and, more broadly, digital technology. In this early paper I outlined some possible areas of investigation, and in this one I explored online misinformation from a cognitive anthropology perspective. I have also studied whether and how digital media can boost cumulative cultural evolution, using online fan fiction as a case study, as well as how content biases may operate differently when the same material is transmitted orally (as in a "telephone game") versus shared online.

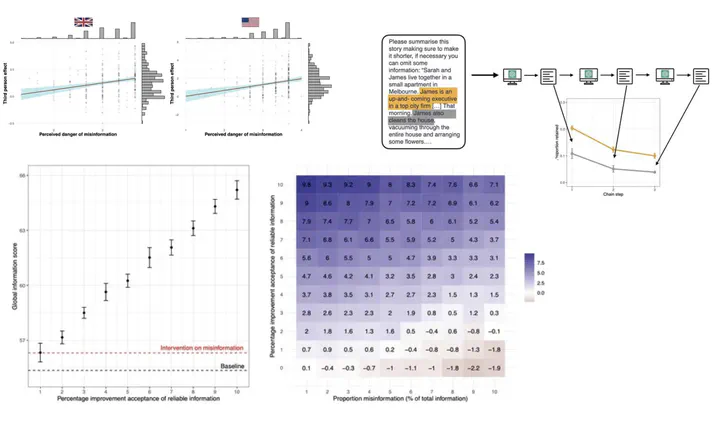

A central claim emerging from this line of research is that both the media and researchers have overstated the threat of online misinformation. Consistent with an evolutionary view of social learning, humans are not overly gullible; they are generally wary learners. Together with colleagues, I have argued that several common assumptions about online misinformation are misguided; that people who believe misinformation is a serious problem tend to think that others (but not themselves!) are especially gullible; and that we would be better off focusing on the broader digital information ecosystem rather than fixating on so-called "fake news". I developed these ideas, among others, in more detail in my book Cultural Evolution in the Digital Age (Oxford University Press, 2020). You probably won't find it in airport bookshops, but it was generally well received.

More recently, I wrote a book for a wider audience (so far available only in Italian), titled Tecnopanico, which offers a more nuanced view of the effects of digital technologies and discusses the potential downsides of an overly alarmist narrative.

I am also interested, again from a cultural evolutionary perspective, in recent developments in AI. In a review/position paper, we argued that cultural evolution provides a promising framework for studying AI systems. One example is this research, in which we used transmission chain experiments to show that large language models exhibit biases similar to human ones.